Part 8 of experiments in FreeBSD and Kubernetes: Building a Custom Cloud Image in CBSD

Table of Contents

- Great Image Bake-Off

- Cloud Seeding

- Great Image Bake-Off, Round 2

- Cloud Seeding, Round 2

- Great Image Bake-Off Finals

In the previous post in this series, I created a custom VM configuration so I could create Alpine Linux VMs in CBSD. That experiment went well. Next up was creating a cloud image for Alpine to allow completely automated configuration of the target VM. However, that plan hit some roadblocks and required a deep dive into a new rabbit hole, documented in this post.

Great Image Bake-Off

I’m going to try to use my existing Alpine VM to install cloud-init from the edge branch (I have no idea whether it’s compatible, but I guess we will find out). The Alpine package tool, apk, doesn’t seem to support specifying packages for a different branch than the one installed, so I uncomment the edge repositories in the apk configuration.
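As a rough sketch of that step, assuming the repositories file already contains commented-out edge entries (which may not be true of every install), a small helper can uncomment them. The function name `enable_edge_repos` is made up for illustration, and it takes the file path as an argument so it can be tried on a copy first.

```shell
#!/bin/sh
# Uncomment any commented-out "edge" repository lines in an apk repositories file.
# A backup of the original is left next to it with a .bak suffix.
enable_edge_repos() {
    repo_file="$1"
    # Strip a leading "#" (and any spaces after it) only on lines mentioning /edge/.
    sed -i.bak -e '/\/edge\//s/^#[[:space:]]*//' "$repo_file"
}

# Possible use inside the Alpine VM, as root:
#   enable_edge_repos /etc/apk/repositories
#   apk update
#   apk add cloud-init
```

Mixing edge packages into a stable release can pull in newer dependencies than intended, so it is probably worth doing this only in a throwaway VM like this one.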

I can’t easily test whether it works without rebooting and then cleaning up the markers cloud-init leaves behind to track state across reboots so that it doesn’t bootstrap more than once. I will just have to test in the new VM. (I also needed to run rc-update add cloud-init default in the VM before I shut it down, but more on that later.)

I can’t find any specific docs in CBSD on how they generate their cloud images, or even what the specific format is, although this doc implies that it’s a ZFS volume.

So, I look at the raw images in /usr/cbsd/src/iso.

Oh. They’re symbolic links to actual ZFS volume devices. Ok. I create the image directly from alpine1’s ZFS volume.
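A minimal sketch of that kind of copy, using plain dd. The helper name `copy_raw_image` is invented here, and the zvol path in the usage comment is a placeholder, not the real dataset name.

```shell
#!/bin/sh
# Copy a block device (or any file) byte-for-byte into a raw image file.
copy_raw_image() {
    src="$1"
    dst="$2"
    # Block size is given in bytes (1 MiB) because size suffixes differ
    # between dd implementations.
    dd if="$src" of="$dst" bs=1048576
}

# Hypothetical invocation on the CBSD host. The source path is an assumption;
# use whatever device alpine1's disk symlink actually points to:
#   copy_raw_image /dev/zvol/<pool>/<dataset>/alpine1/dsk1.vhd \
#       ~cbsd/src/iso/cbsd-cloud-cloud-AlpineLinux-3.12.1-x86_64-standard.raw
```

The source VM should be shut down first so the volume is not changing while it is being read.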

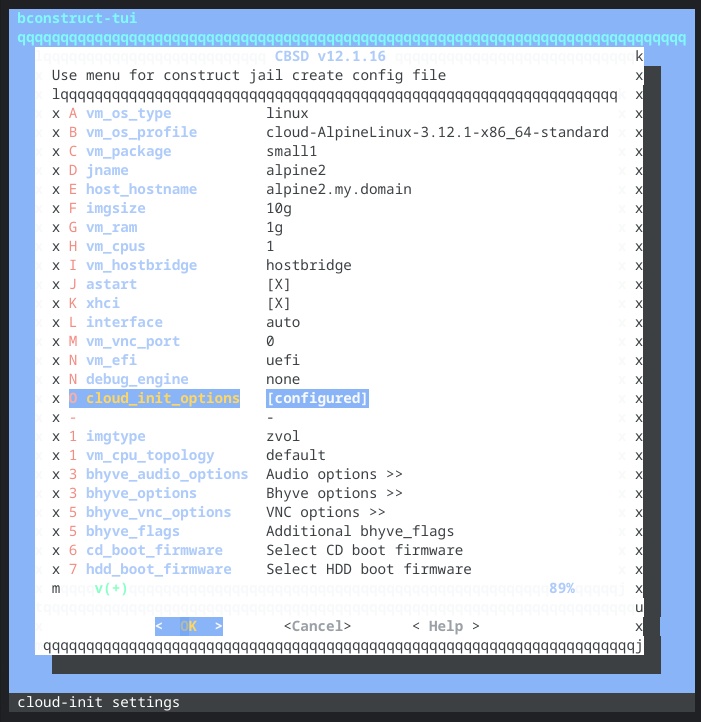

I left the raw file in ~cbsd/src/iso to see if CBSD would import it into ZFS automatically when I run cbsd bconstruct-tui.

Cloud Seeding

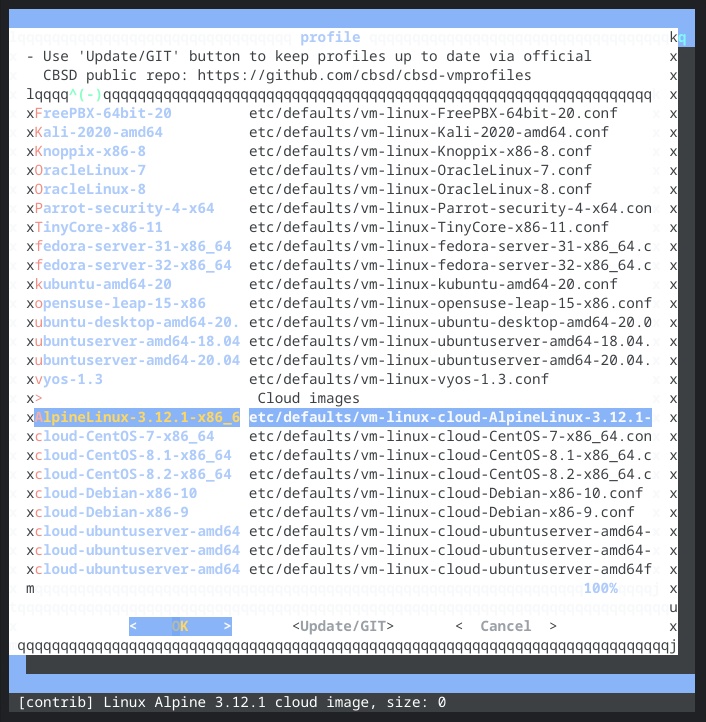

Again, I have no idea if the above steps actually created a bootable image which CBSD will accept, but before I can try, I have to create the cloud VM profile’s configuration.

I have no idea what variables or custom parameters Alpine uses or supports for cloud-init and since I’ve been combing through CBSD and other docs all day, I would just as soon skip digging into cloud-init. I copied over the CBSD configuration file for a cloud Ubuntu VM profile in ~cbsd/etc/defaults and edited that.

Also, I could not find it documented, but the cloud-init template files used to populate VMs are stored in /usr/local/cbsd/modules/bsdconf.d/cloud-tpl. The Debian cloud VM I made earlier used the centos7 templates, so I will try those with Alpine.

Here’s the VM profile I create as /usr/cbsd/etc/defaults/vm-linux-cloud-AlpineLinux-3.12.1-x86_64-standard.conf:

And then I create the new VM using that profile.

The good news: CBSD did indeed import the disk file. The bad news: bhyve couldn’t start the VM.

Ok, that’s a little weird… There are four raw device files instead of the one the other cloud images have.

OOOOOOOOOkay. I copied alpine1’s entire virtual disk, which includes the partition table, EFI partition, and swap partition, in addition to the system partition. I’m not sure how CBSD’s import ended up breaking out the partitions, but either way, we only care about the system data, not EFI or swap. We’ll need to extract the root partition into its own raw file.

Great Image Bake-Off, Round 2

I need to get the contents of the linux-data partition out of the vhd file. There are a few ways to do this, but the simplest way is to create a memory disk so we can access each partition through a device file. First we need to get the vhd contents into a regular file, because mdconfig cannot work from the character special device in the ZFS /dev/zvol tree.

mdconfig created /dev/md0 for the entire virtual hard disk, and because the vhd had a partition table, the md driver (I assume) also created device files for each partition. I just want the third partition, so I need to read from /dev/md0p3.
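The sequence described above might look roughly like the following on the FreeBSD host. This is a sketch, not the exact commands from the original session: the function name, the `RUN` dry-run switch, and all paths are illustrative assumptions, and it pins the memory disk to unit 0 so the device name is predictable.

```shell
#!/bin/sh
# Set RUN=echo to print the commands instead of executing them (dry run).
RUN="${RUN:-}"

# Extract one partition from a full-disk volume into its own raw file.
extract_partition() {
    zvol="$1"      # source volume, e.g. a path under /dev/zvol
    workfile="$2"  # regular file that will hold the full disk copy
    part="$3"      # partition index (3 in this post)
    out="$4"       # destination raw file for that partition

    # 1. Copy the volume into a regular file for mdconfig to attach.
    $RUN dd if="$zvol" of="$workfile" bs=1048576
    # 2. Attach the file as a vnode-backed memory disk on unit 0 (/dev/md0).
    $RUN mdconfig -a -t vnode -f "$workfile" -u 0
    # 3. Copy out only the wanted partition.
    $RUN dd if="/dev/md0p${part}" of="$out" bs=1048576
    # 4. Detach the memory disk again.
    $RUN mdconfig -d -u 0
}
```

Running it once with `RUN=echo` prints the four commands without touching any devices. Running `gpart show md0` after step 2 is one way to confirm which partition index holds the root file system.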

Cloud Seeding, Round 2

We now have alpine1’s root file system in raw format. I copy that file to cbsd-cloud-cloud-AlpineLinux-3.12.1-x86_64-standard.raw and try to create my VM again.

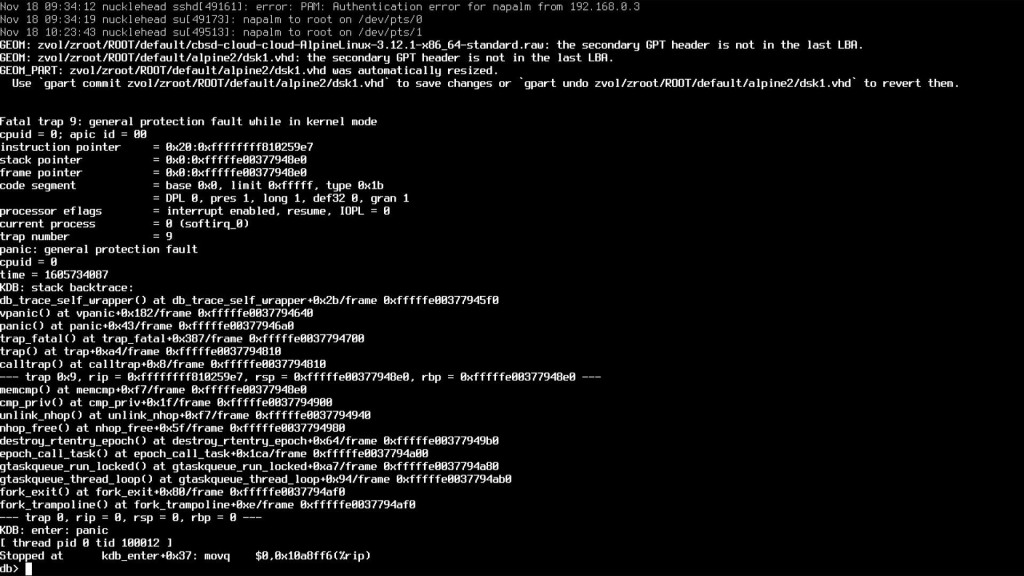

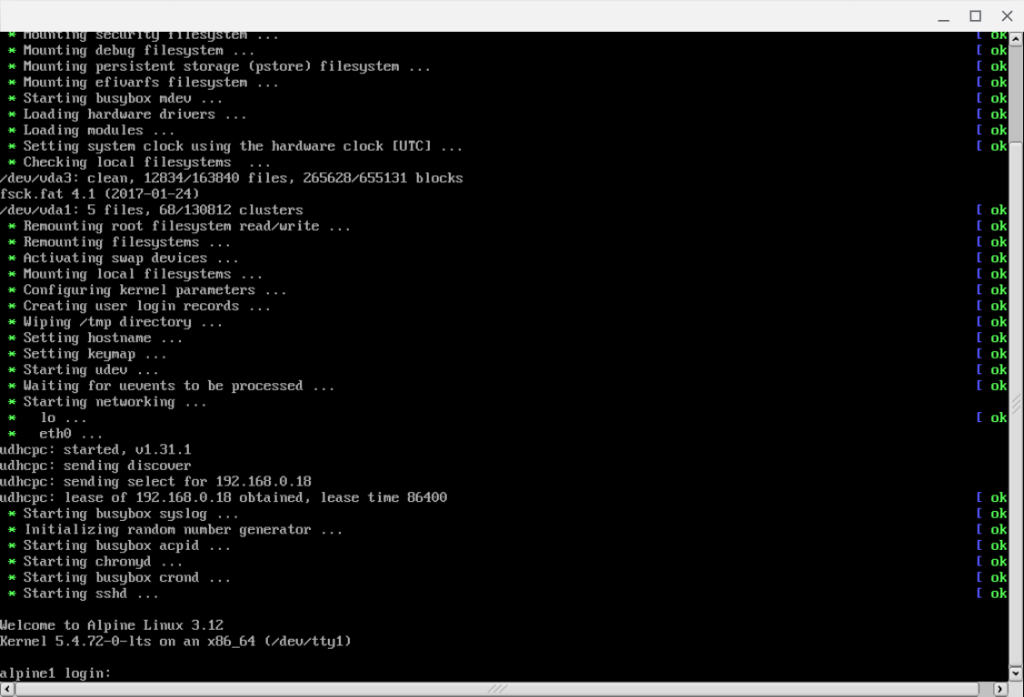

It started without error! CBSD once again automatically handles importing the raw file I just dropped into the src/iso directory. Now we need to see if it actually booted via VNC. I opened the VNC client and… it timed out trying to connect. I go back to the terminal window with the ssh session to my NUC and… that’s hanging. So, I connect my HDMI capture dongle to the NUC, and see FreeBSD had panicked.

But when the NUC rebooted, it brought up the alpine2 VM without any obvious issues, and I could connect to the console. I don’t know why.

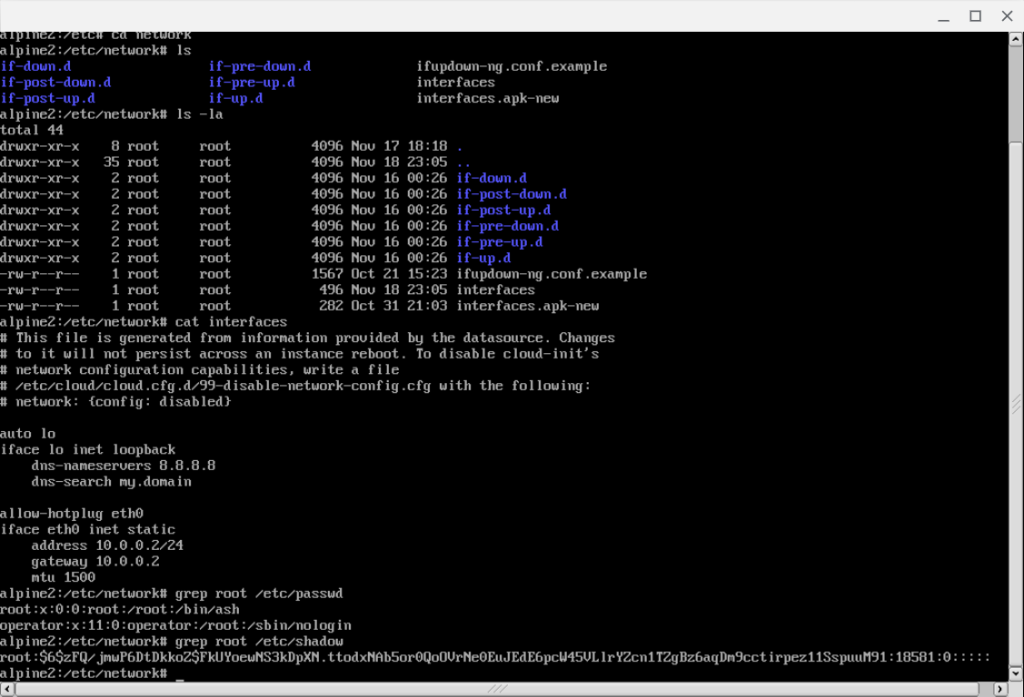

However, the new alpine2 VM still thinks it’s alpine1 and did not apply any of the other cloud-init configurations. And even if cloud-init had run successfully, I couldn’t really count it as much of a win if causing the host to panic is part of the deal.

But I’ll worry about the panic problem later. First, I want to diagnose the cloud-init issue. If I run openrc --service cloud-init start in my VM, cloud-init runs and successfully reconfigures the hostname to alpine2. It also rewrites /etc/network/interfaces with the static IP address I had assigned, and… ok, it did not use my password hash for root. Meh, I’ll still consider that a win.

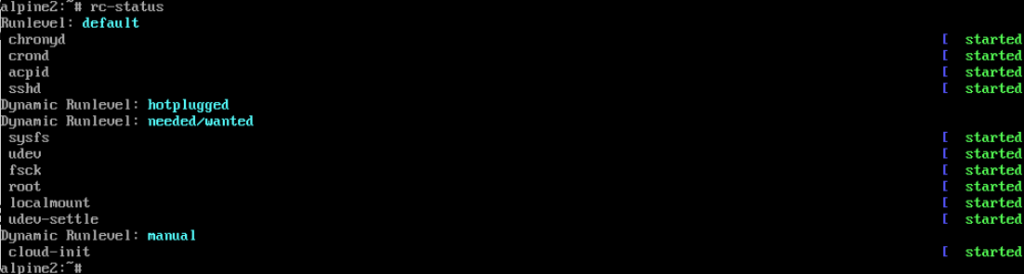

Ok, I’ll need to go back to my source VM and run rc-update add cloud-init default to run cloud-init at boot, then make a new raw image. But I should also figure out what may have caused the FreeBSD kernel panic.
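For reference, the boot-time registration is a one-liner with OpenRC. The wrapper below is only a sketch: the function name and the `RUN` dry-run switch are made up for illustration, and depending on how the Alpine package splits its services, more than the single cloud-init service may need enabling.

```shell
#!/bin/sh
# Set RUN=echo to print the commands instead of executing them (dry run).
RUN="${RUN:-}"

# Register cloud-init in the default runlevel so OpenRC starts it on every boot.
enable_cloud_init_at_boot() {
    $RUN rc-update add cloud-init default
    # List the default runlevel to confirm the service is registered.
    $RUN rc-update show default
}

# Inside the source VM, as root, before shutting it down:
#   enable_cloud_init_at_boot
```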

After I rebooted the NUC, I saw similar GPT-related messages for the other cloud raw ZFS volumes in the dmesg log.

Ok, fine, I should check to see how the disks in the CBSD-distributed raw cloud images are laid out.

Ok, the CBSD-distributed raw cloud files are in fact full disk images, including partition table and other partitions, with no consistency between the number, order, or even types of partitions. So I apparently was overthinking when I decided I needed to extract the root data partition.
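One way to do that kind of survey, sketched under the assumption that the cloud images all appear in the iso directory as symlinks named cbsd-cloud-*.raw (the helper name is invented): resolve each symlink, then hand the resulting device to gpart.

```shell
#!/bin/sh
# Print the target of every cloud image symlink in a directory.
list_cloud_image_targets() {
    iso_dir="$1"
    for link in "$iso_dir"/cbsd-cloud-*.raw; do
        # Skip anything that is not a symlink (including an unmatched glob).
        [ -L "$link" ] || continue
        readlink "$link"
    done
}

# On the FreeBSD host, each target could then be inspected with gpart:
#   list_cloud_image_targets /usr/cbsd/src/iso | while read -r dev; do
#       gpart show "$dev"
#   done
```

Plain raw files that have not been imported yet are skipped, since only symlinks point at ZFS volumes.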

I update the alpine1 image in a non-cloud VM to set cloud-init to run at boot, and then it’s time to try again to create a raw image that won’t cause a kernel panic.

Great Image Bake-Off Finals

I’m not sure what else to try to get a safe image, or even whether I missed something the first time I tried to copy the source ZFS volume contents to a raw file. So, I figure I may as well try that again with my updated raw image. I remove the artifacts of the previous attempt and try again.

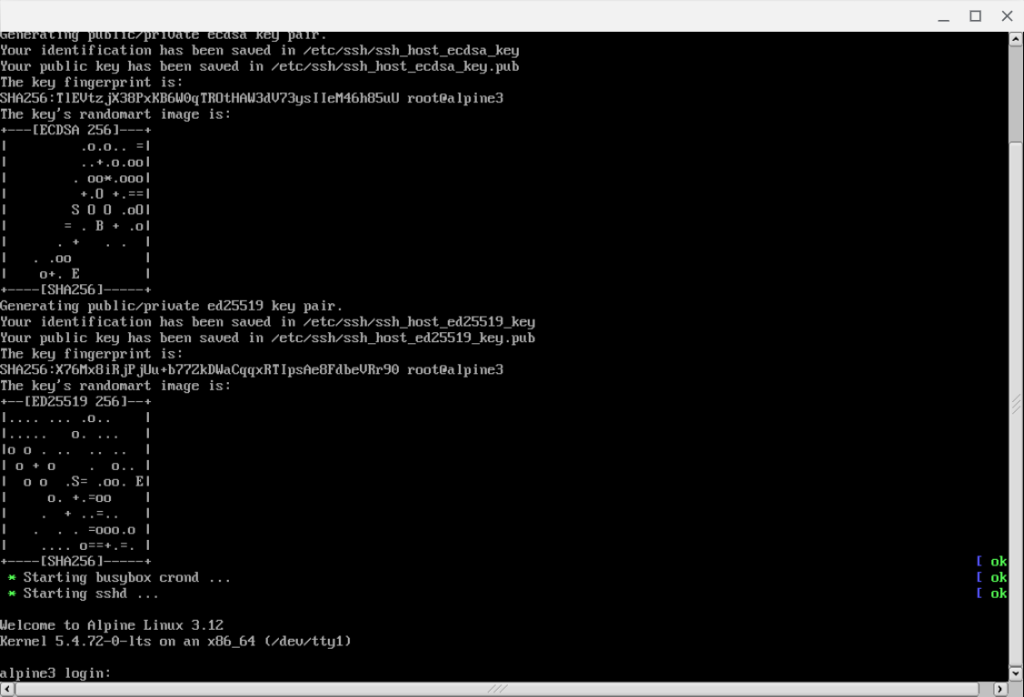

Then I run cbsd bconstruct-tui again with the same settings (except for details like hostname and IP addresses) and start the VM.

It starts, it boots, cloud-init runs, and as a bonus, no FreeBSD kernel panic! (No, my password hash for the root user did not get applied this time, either, and I think /etc/network/interfaces gets written with the correct information because I used the CentOS template, but Alpine does not use the same network configuration method. But do you really want this post to get longer so you can watch me debug that?)

That took a lot of trial and error, even though I left out a few dead ends and lots and lots of web searches.

In the next part, I will actually live up to the original premise of this series and start doing real Kubernetty things that involve FreeBSD.